Using Hash Digests to Validate Data Copies

Whenever data is migrated or replicated between two systems, one of the most important tasks is validating the results of the copy operation. It is an unfortunate reality that data can be corrupted in transit, by a bad disk write at the destination, or for other reasons. Wherever the corruption originates, it is critical to identify every invalid copy.

Datadobi’s DobiMiner Suite provides an automated solution that guarantees data is valid at the destination site with no extra effort required when setting up a project. All migration and replication policies automatically apply data content validation in a consistent fashion, regardless of the protocol(s) in use. Data content validation is accomplished with MD5 hash digests, which act as a fingerprint of each file’s content so that the source and destination copies can be compared.

Legacy Tools Offer No Validation

Legacy tools such as Robocopy and rsync have no inherent data validation mechanisms or capabilities. Additional scripting activities are required because separate utilities must be used to validate the copies. For example, Robocopy must be augmented with the (unsupported) Microsoft FCIV utility.

The scripting exercise becomes more complex because there is no longer just a collection of Robocopy scripts executing copies. The list of files copied by Robocopy must be logged along with their associated directory structure. Another script parses the log file and feeds the paths and filenames to the FCIV utility. The FCIV validation script checks both the source and destination versions of each file for comparison. The validation script has to record any failures in a validation error log file so that yet another script can parse this output and extract the filenames with validation errors. Since Robocopy is aimed at copying directory structures rather than individual files, alternate copy methods will probably need to be used to recopy the list of invalid files. Finally, there is the issue of placing all these scripts in the OS scheduler and hoping they all run in relative harmony.
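To make the validation step of that workflow concrete, here is a minimal sketch in Python rather than FCIV. It assumes the list of copied files has already been extracted from the copy log as relative paths; the function names `md5_of_file` and `find_invalid_copies` are hypothetical and are not part of Robocopy, FCIV, or any Datadobi product.

```python
import hashlib
from pathlib import Path


def md5_of_file(path, chunk_size=1024 * 1024):
    """Return the hex MD5 digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        # Read fixed-size chunks so large files are never loaded whole.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_invalid_copies(src_root, dst_root, rel_paths):
    """Return the relative paths whose destination copy is missing or differs."""
    invalid = []
    for rel in rel_paths:
        src = Path(src_root) / rel
        dst = Path(dst_root) / rel
        # A missing destination file counts as a failed copy.
        if not dst.is_file() or md5_of_file(src) != md5_of_file(dst):
            invalid.append(rel)
    return invalid
```

The returned list plays the role of the validation error log: it is what a later step would hand to a per-file copy tool for recopying. Log parsing, scheduling, and the recopy itself are left out, which is exactly the orchestration burden the paragraph above describes.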

Clearly, this makes for an overly complex scripting and orchestration exercise. Plus, if the migration or replication project involves both SMB and NFS protocols, the scripting effort is doubled, because each protocol’s environment has its own set of copy and validation tools.

Datadobi’s Automated Approach

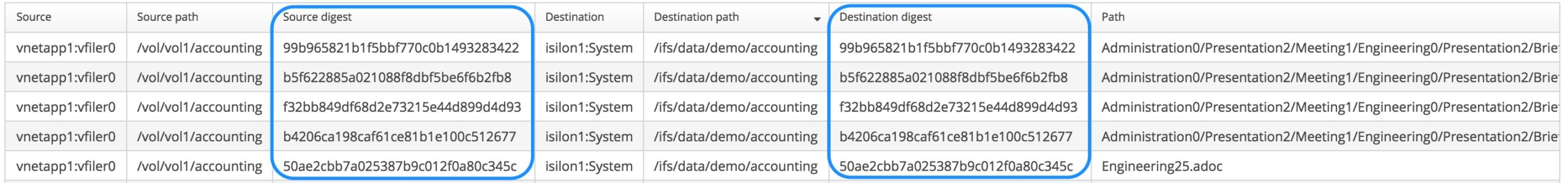

Luckily, for both SMB and NFS workflows, Datadobi automates all of the tasks required to copy, validate, and remediate failed copies. Both metadata and content are validated. Each time a file is read from the source platform, an MD5 hash digest is calculated, creating a “fingerprint” of that file’s content. After the file is copied to the destination, the content is re-read and a second hash digest is calculated. The two hash digest values (i.e., the “fingerprints”) are compared, and if they differ in any way the validation test fails. The file is then marked as a validation error and is re-copied automatically during the next incremental refresh.
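The read, copy, re-read, and compare sequence described above can be illustrated with a short Python sketch. This is not Datadobi’s implementation; the function name `copy_and_validate` and the chunk size are assumptions chosen for illustration, and the sketch covers file content only, not metadata.

```python
import hashlib


def copy_and_validate(src_path, dst_path, chunk_size=1024 * 1024):
    """Copy src_path to dst_path; return True if both MD5 digests match."""
    source_digest = hashlib.md5()
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        for chunk in iter(lambda: src.read(chunk_size), b""):
            # Fingerprint the data as it is read from the source.
            source_digest.update(chunk)
            dst.write(chunk)

    # Re-read the destination and compute a second, independent digest.
    dest_digest = hashlib.md5()
    with open(dst_path, "rb") as dst:
        for chunk in iter(lambda: dst.read(chunk_size), b""):
            dest_digest.update(chunk)

    return source_digest.hexdigest() == dest_digest.hexdigest()
```

A caller would treat a `False` result as a validation error and queue the file for another copy attempt. One caveat of this simplified version: on a local filesystem the re-read may be served from the operating system’s cache rather than from the disk itself, so it is a weaker check than a re-read from the destination storage platform.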

In the case of a migration project, the automated hash validation routines can also be leveraged after a switchover event to produce a list of all files migrated, including the hash digest for each file. This data is useful for a chain of custody report or for auditors who want proof that all data was copied successfully during the course of the migration. The report is available with a click of the mouse, no scripting required.
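For readers who want a feel for what a file-plus-digest listing contains, the following Python sketch writes a simple CSV manifest for a directory tree. The CSV layout, the column names, and the function name `write_manifest` are all hypothetical; this is not the format of Datadobi’s report.

```python
import csv
import hashlib
import os


def write_manifest(root, manifest_path):
    """Write a CSV of (relative path, MD5 digest) for every file under root."""
    rows = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            digest = hashlib.md5()
            with open(full, "rb") as handle:
                for chunk in iter(lambda: handle.read(1024 * 1024), b""):
                    digest.update(chunk)
            rows.append((os.path.relpath(full, root), digest.hexdigest()))

    rows.sort()  # stable ordering makes two manifests easy to diff
    with open(manifest_path, "w", newline="") as out:
        writer = csv.writer(out)
        writer.writerow(["path", "md5"])  # hypothetical column names
        writer.writerows(rows)
    return len(rows)
```

Running this against the source before switchover and against the destination afterwards would yield two files that can be compared line by line, which is the kind of evidence an auditor typically asks for.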

For more information on Datadobi’s approach to validation and its importance see our technical brief here.